Oracle MCP + OCI GenAI: Multi-Agent Architecture for Enterprise AI at Scale

Oracle brings the Model Context Protocol (MCP) to enterprise AI deployments by integrating its implementation with the OCI Generative AI service, enabling scalable and secure multi-agent architectures for large organizations.

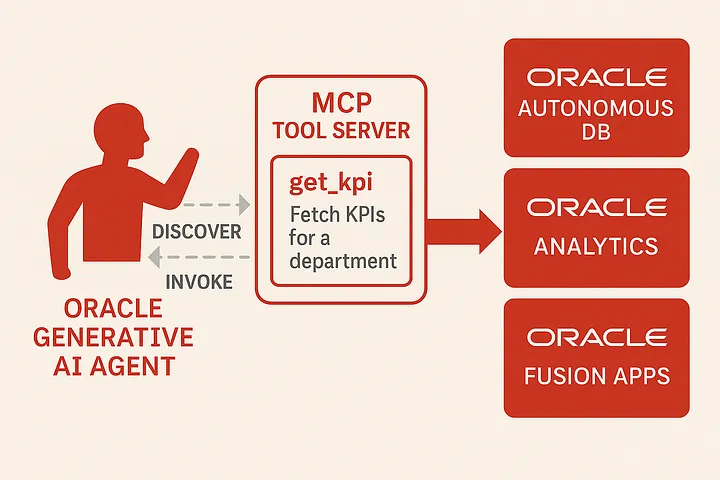

MCP Architecture on Oracle Cloud

Oracle's implementation of the Model Context Protocol enables standardized communication between AI agents:

    from oci import generative_ai
    from oracle_mcp import MCPOrchestrator, Agent

    # NOTE: OracleAutonomousDB, OracleAnalytics and PandasToolkit are used below,
    # but the source does not show which module they are imported from.

    # Configure the MCP orchestrator
    orchestrator = MCPOrchestrator(
        config={
            "compartment_id": "ocid1.compartment.oc1...",
            "region": "us-ashburn-1",
            "security_policy": "enterprise_grade",
        }
    )

    # Define specialized agents
    data_analyst = Agent(
        name="DataAnalyst",
        model="cohere.command-r-plus",
        capabilities=["sql_generation", "data_visualization", "statistical_analysis"],
        tools=[
            OracleAutonomousDB(),
            OracleAnalytics(),
            PandasToolkit(),
        ],
    )

    security_auditor = Agent(
        name="SecurityAuditor",
        model="meta.llama-3-70b",
        capabilities=["code_review", "vulnerability_scanning", "compliance_check"],
        context_restrictions=["no_pii_access", "audit_only"],
    )

    # Register the agents with the orchestrator
    orchestrator.register_agents([data_analyst, security_auditor])

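Inter-Agent Communication Protocol

    # Example MCP message envelope
    import uuid
    from datetime import datetime, timezone


    class MCPMessage:
        def __init__(self):
            self.header = {
                "version": "1.0",
                # Timezone-aware UTC timestamp in ISO 8601, so the header is JSON-serializable
                "timestamp": datetime.now(timezone.utc).isoformat(),
                "correlation_id": str(uuid.uuid4()),
                "security_context": self.get_security_context(),
            }

        def get_security_context(self):
            # Placeholder: the source does not define this method. A real
            # implementation would return the caller's identity and permissions.
            return {}

        def create_task_request(self, task_type, parameters):
            return {
                "header": self.header,
                "body": {
                    "task_type": task_type,
                    "parameters": parameters,
                    "constraints": {
                        "timeout_seconds": 300,
                        "max_tokens": 4096,
                        "required_capabilities": ["sql_generation"],
                    },
                },
                "routing": {
                    "strategy": "capability_based",
                    "fallback_agent": "generalist",
                },
            }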
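
The `routing` block in the request names a `capability_based` strategy with a `fallback_agent`, but the source does not show how an agent is selected. A minimal sketch of one possible interpretation follows: pick the first agent whose capabilities cover everything in `required_capabilities`, otherwise use the fallback. `AgentInfo` and `route` are hypothetical names introduced for illustration and are not part of any Oracle API.

```python
from dataclasses import dataclass, field


@dataclass
class AgentInfo:
    """Hypothetical stand-in for a registered agent."""
    name: str
    capabilities: set = field(default_factory=set)


def route(request, agents):
    """Return the first agent covering all required capabilities, else the fallback."""
    required = set(request["body"]["constraints"]["required_capabilities"])
    for agent in agents:
        if required and required <= agent.capabilities:
            return agent
    # No specialist matched: look up the fallback agent by name.
    fallback = request.get("routing", {}).get("fallback_agent", "generalist")
    by_name = {a.name: a for a in agents}
    if fallback not in by_name:
        raise LookupError(f"no agent covers {sorted(required)} and {fallback!r} is not registered")
    return by_name[fallback]


agents = [
    AgentInfo("generalist"),
    AgentInfo("DataAnalyst", {"sql_generation", "data_visualization"}),
]
request = {
    "body": {"constraints": {"required_capabilities": ["sql_generation"]}},
    "routing": {"strategy": "capability_based", "fallback_agent": "generalist"},
}
chosen = route(request, agents)
```

Here `chosen` is the `DataAnalyst` entry. A request that requires a capability nobody offers falls through to `generalist`.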

OCI GenAI Service Integration

1. Model Deployment Pipeline

    # Deploying custom models with OCI GenAI
    # Endpoint management belongs to GenerativeAiClient (management plane);
    # GenerativeAiInferenceClient only serves inference requests.
    from oci.generative_ai import GenerativeAiClient


    class OCIModelDeployment:
        def __init__(self, config, compartment_id):
            self.client = GenerativeAiClient(config)
            self.compartment_id = compartment_id
            self.endpoint_id = None

        async def upload_to_object_storage(self, model_path):
            # Placeholder: this helper is not defined in the source.
            raise NotImplementedError

        async def deploy_fine_tuned_model(self, model_path, base_model="cohere.command"):
            # 1. Upload model to Object Storage
            model_url = await self.upload_to_object_storage(model_path)

            # 2. Create custom model endpoint
            # Illustrative payload: the OCI SDK expects a CreateEndpointDetails
            # model, whose fields differ from the keys shown here.
            create_endpoint_details = {
                "display_name": "custom-enterprise-model",
                "compartment_id": self.compartment_id,
                "base_model": base_model,
                "fine_tuned_model_url": model_url,
                "deployment_config": {
                    "instance_shape": "BM.GPU.A100.4",
                    "replica_count": 3,
                    "load_balancer_shape": "flexible",
                },
            }

            response = self.client.create_endpoint(
                create_endpoint_details=create_endpoint_details
            )
            self.endpoint_id = response.data.id
            return self.endpoint_id
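
Creating an endpoint is typically asynchronous, so a deployment pipeline usually waits until the endpoint is ready before routing traffic to it. The sketch below shows a generic polling loop under stated assumptions: `get_state` is a hypothetical callable that returns the current lifecycle state, and the state names (`CREATING`, `ACTIVE`, `FAILED`, `DELETED`) are assumptions modeled on common OCI lifecycle states.

```python
import time


def wait_for_state(get_state, target="ACTIVE", failed=("FAILED", "DELETED"),
                   timeout_s=600.0, interval_s=5.0,
                   sleep=time.sleep, clock=time.monotonic):
    """Poll get_state() until it returns target; raise on a failure state or timeout."""
    deadline = clock() + timeout_s
    while True:
        state = get_state()
        if state == target:
            return state
        if state in failed:
            raise RuntimeError(f"endpoint entered terminal state {state}")
        if clock() >= deadline:
            raise TimeoutError(f"endpoint still {state} after {timeout_s}s")
        sleep(interval_s)


# Simulated lifecycle: two polls in CREATING, then ACTIVE.
states = iter(["CREATING", "CREATING", "ACTIVE"])
final_state = wait_for_state(lambda: next(states), sleep=lambda seconds: None)
```

Injecting `sleep` and `clock` keeps the loop testable without real delays.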

2. Distributed Agent Orchestration

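    # Distributed orchestration on OCI
    from oci.container_instances import ContainerInstanceClient
    from oci.load_balancer import LoadBalancerClient


    class DistributedMCPOrchestrator:
        def __init__(self, config, registry_url):
            # OCI SDK clients take a configuration dict (e.g. from oci.config.from_file())
            self.container_instances = ContainerInstanceClient(config)
            self.load_balancer = LoadBalancerClient(config)
            self.registry_url = registry_url

        def configure_load_balancer(self, deployments):
            # Placeholder: this helper is not defined in the source.
            raise NotImplementedError

        def deploy_agent_cluster(self, agent_configs):
            deployments = []

            for config in agent_configs:
                # Create one container instance per agent.
                # Illustrative payload: the SDK call expects a
                # CreateContainerInstanceDetails model rather than a plain dict.
                container_config = {
                    "image": f"ocir.io/oracle/mcp-agent:{config.version}",
                    "shape": "CI.Standard.E4.Flex",
                    "memory_gb": config.memory_requirements,
                    "cpu_count": config.cpu_requirements,
                    "environment_variables": {
                        "AGENT_TYPE": config.agent_type,
                        "MODEL_ENDPOINT": config.model_endpoint,
                        "MCP_REGISTRY": self.registry_url,
                    },
                }

                deployment = self.container_instances.create_container_instance(
                    container_config
                )
                deployments.append(deployment)

            # Configure the load balancer to distribute requests
            self.configure_load_balancer(deployments)
            return deployments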
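
The source leaves `configure_load_balancer` undefined. As a rough illustration of what distributing requests across agent replicas means, here is a minimal round-robin sketch in plain Python. `RoundRobinBalancer` and the backend names are hypothetical, and a real OCI Load Balancer would be configured through its own policies rather than application code.

```python
import itertools


class RoundRobinBalancer:
    """Hands out backends in a fixed rotating order."""

    def __init__(self, backends):
        backends = list(backends)
        if not backends:
            raise ValueError("at least one backend is required")
        self._cycle = itertools.cycle(backends)

    def next_backend(self):
        return next(self._cycle)


balancer = RoundRobinBalancer(["agent-0", "agent-1", "agent-2"])
picked = [balancer.next_backend() for _ in range(5)]
# picked == ["agent-0", "agent-1", "agent-2", "agent-0", "agent-1"]
```

Round-robin works well when agents are stateless and requests cost roughly the same. Uneven workloads usually call for a least-connections or latency-aware policy instead.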

Enterprise Use Cases

1. Multi-Agent Financial Analysis System

    # Financial analysis pipeline with multiple agents.
    # MCPPipeline is not imported in the source; Agent and orchestrator
    # are the objects set up in the earlier configuration example.
    import asyncio

    financial_analysis_pipeline = MCPPipeline(
        name="quarterly_financial_analysis",
        agents=[
            Agent("DataCollector", capabilities=["api_integration", "web_scraping"]),
            Agent("FinancialAnalyst", capabilities=["ratio_analysis", "forecasting"]),
            Agent("RiskAssessor", capabilities=["var_calculation", "stress_testing"]),
            Agent("ReportGenerator", capabilities=["visualization", "narrative_generation"]),
        ],
        workflow={
            "steps": [
                {
                    "agent": "DataCollector",
                    "action": "gather_financial_data",
                    "outputs": ["raw_financial_data"],
                },
                {
                    "agent": "FinancialAnalyst",
                    "action": "analyze_performance",
                    "inputs": ["raw_financial_data"],
                    "outputs": ["performance_metrics"],
                },
                {
                    "agent": "RiskAssessor",
                    "action": "assess_risks",
                    "inputs": ["performance_metrics"],
                    "outputs": ["risk_report"],
                },
                {
                    "agent": "ReportGenerator",
                    "action": "create_executive_summary",
                    "inputs": ["performance_metrics", "risk_report"],
                    "outputs": ["final_report"],
                },
            ]
        },
    )


    async def main():
        # Run the pipeline; `await` is only valid inside a coroutine
        return await orchestrator.execute_pipeline(
            financial_analysis_pipeline,
            initial_inputs={"company_ticker": "ORCL", "period": "Q4-2024"},
        )


    result = asyncio.run(main())
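
Each workflow step declares named `inputs` and `outputs`, which implies that every step can only consume values produced earlier. The sketch below shows one way such a workflow could be validated and executed sequentially. `validate_workflow`, `run_workflow`, and the handler functions are hypothetical helpers written for illustration, and the sample revenue figures are made up for the demo.

```python
def validate_workflow(steps, initial_inputs):
    """Check that every declared input exists before the step that needs it."""
    available = set(initial_inputs)
    for step in steps:
        missing = [n for n in step.get("inputs", []) if n not in available]
        if missing:
            raise ValueError(f"step {step['action']!r} needs undefined inputs: {missing}")
        available.update(step.get("outputs", []))


def run_workflow(steps, handlers, initial_inputs):
    """Run steps in order, passing each handler only the values it declared."""
    validate_workflow(steps, initial_inputs)
    context = dict(initial_inputs)
    for step in steps:
        # Steps without declared inputs receive the pipeline's initial inputs.
        names = step.get("inputs", list(initial_inputs))
        produced = handlers[step["action"]]({n: context[n] for n in names})
        for name in step["outputs"]:
            context[name] = produced[name]
    return context


steps = [
    {"agent": "DataCollector", "action": "gather_financial_data",
     "outputs": ["raw_financial_data"]},
    {"agent": "FinancialAnalyst", "action": "analyze_performance",
     "inputs": ["raw_financial_data"], "outputs": ["performance_metrics"]},
]
handlers = {
    # Made-up numbers, purely to exercise the data flow.
    "gather_financial_data": lambda inp: {
        "raw_financial_data": {"ticker": inp["company_ticker"], "revenue": [10, 15]}},
    "analyze_performance": lambda inp: {
        "performance_metrics": {
            "growth": inp["raw_financial_data"]["revenue"][1]
            / inp["raw_financial_data"]["revenue"][0] - 1}},
}
outcome = run_workflow(steps, handlers, {"company_ticker": "ORCL", "period": "Q4-2024"})
```

Validating before execution surfaces wiring mistakes, such as a step that reads `risk_report` before any step produces it, without spending any model calls.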

2. Security and Compliance Automation

- Automatic audit trail: every MCP interaction is logged
- Data residency: control over where processing takes place
- Role-based access: integrated IAM policies
- Encryption in transit and at rest: TLS 1.3 + AES-256
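
To make the audit-trail item concrete, here is a minimal sketch of a structured audit record keyed by correlation ID. The field names and the in-memory `AuditTrail` class are assumptions for illustration; the source does not describe the actual log schema or storage.

```python
import json
import uuid
from datetime import datetime, timezone


def audit_record(agent, action, principal, correlation_id=None):
    """Build one audit entry; a correlation ID ties it to the originating request."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "correlation_id": correlation_id or str(uuid.uuid4()),
        "agent": agent,
        "action": action,
        "principal": principal,
    }


class AuditTrail:
    """Append-only in-memory log; a real system would write to durable storage."""

    def __init__(self):
        self._lines = []

    def log(self, **fields):
        record = audit_record(**fields)
        self._lines.append(json.dumps(record, sort_keys=True))
        return record

    def lines(self):
        return tuple(self._lines)


trail = AuditTrail()
trail.log(agent="SecurityAuditor", action="compliance_check",
          principal="svc-audit", correlation_id="abc-123")
```

Serializing each entry as one JSON line keeps the log easy to ship to a log service and to filter by `correlation_id`.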

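Performance and Optimizations

1. Intelligent Caching

    import hashlib
    import json

    from redis import Redis


    class MCPResponseCache:
        def __init__(self, redis_config):
            self.redis_client = Redis(**redis_config)
            self.ttl = 3600  # 1 hour

        def generate_semantic_key(self, request):
            # Placeholder: the source does not define this method. Hashing the
            # canonical JSON form only matches requests that are exactly equal.
            payload = json.dumps(request, sort_keys=True)
            return "mcp:" + hashlib.sha256(payload.encode("utf-8")).hexdigest()

        def get_or_compute(self, request, compute_func):
            # Generate cache key based on request semantics
            cache_key = self.generate_semantic_key(request)

            # Check cache
            cached_response = self.redis_client.get(cache_key)
            if cached_response is not None:
                return json.loads(cached_response)

            # Compute and cache
            response = compute_func(request)
            self.redis_client.setex(cache_key, self.ttl, json.dumps(response))
            return response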
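
The get-or-compute pattern above can be tried without a Redis server. The following sketch swaps Redis for an in-memory dictionary and uses a simple normalized key that ignores key order, letter case, and extra whitespace in the prompt. `normalized_key` and `InMemoryCache` are hypothetical stand-ins. This normalization is only a rough approximation of a semantic key, which would more likely rely on embeddings.

```python
import hashlib
import json
import time


def normalized_key(request):
    """Key that ignores dict ordering, prompt casing and redundant whitespace."""
    canonical = dict(request)
    canonical["prompt"] = " ".join(str(request.get("prompt", "")).lower().split())
    payload = json.dumps(canonical, sort_keys=True)
    return "mcp:" + hashlib.sha256(payload.encode("utf-8")).hexdigest()


class InMemoryCache:
    def __init__(self, ttl_s=3600, clock=time.monotonic):
        self._ttl, self._clock, self._store = ttl_s, clock, {}

    def get_or_compute(self, request, compute_func):
        key = normalized_key(request)
        hit = self._store.get(key)
        if hit is not None and hit[0] > self._clock():
            return hit[1]  # still fresh: skip the expensive call
        value = compute_func(request)
        self._store[key] = (self._clock() + self._ttl, value)
        return value


calls = []


def fake_model(request):
    calls.append(request)  # count how often the "model" is really invoked
    return {"answer": 42}


cache = InMemoryCache()
first = cache.get_or_compute({"prompt": "Total  revenue?", "model": "m"}, fake_model)
second = cache.get_or_compute({"model": "m", "prompt": "total revenue?"}, fake_model)
```

Both calls return the same response and `fake_model` runs only once. Lowercasing prompts can conflate requests where case matters, so the normalization should be tuned to the workload.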

2. Load-Based Autoscaling

- Horizontal scaling: stateless agents for fast scale-out
- GPU autoscaling: dynamic allocation of GPU resources
- Regional distribution: multi-region deployment to reduce latency
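
The source does not specify a scaling rule. One common choice for stateless services is target tracking, the same proportional formula used by the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current × observed / target). The sketch below applies it with clamping. `desired_replicas` and its default bounds are illustrative assumptions, not an OCI API.

```python
import math


def desired_replicas(current, observed_load, target_load,
                     min_replicas=1, max_replicas=50):
    """Scale the replica count proportionally to observed vs. target load per replica."""
    if current < 1 or target_load <= 0:
        raise ValueError("current must be >= 1 and target_load must be positive")
    raw = math.ceil(current * observed_load / target_load)
    return max(min_replicas, min(max_replicas, raw))


scale_out = desired_replicas(current=3, observed_load=90, target_load=60)
scale_in = desired_replicas(current=4, observed_load=10, target_load=60)
capped = desired_replicas(current=10, observed_load=600, target_load=60)
```

With three replicas at 90 requests/s each against a target of 60, the rule asks for five replicas. The clamp keeps a quiet period from scaling to zero and a spike from exceeding the configured ceiling.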

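Monitoring and Observability

    # Integration with OCI Monitoring
    from datetime import datetime, timezone

    from oci.monitoring import MonitoringClient
    from oci.monitoring.models import Datapoint, MetricDataDetails, PostMetricDataDetails


    class MCPObservability:
        def __init__(self, config, compartment_id):
            self.monitoring = MonitoringClient(config)
            self.compartment_id = compartment_id

        def track_agent_metrics(self, agent_id, metrics):
            now = datetime.now(timezone.utc)

            # Every metric carries its own namespace, compartment and datapoints
            metric_data = [
                MetricDataDetails(
                    namespace="mcp_agents",
                    compartment_id=self.compartment_id,
                    name="response_time_ms",
                    dimensions={"agent_id": agent_id},
                    datapoints=[Datapoint(timestamp=now, value=metrics["response_time"])],
                ),
                MetricDataDetails(
                    namespace="mcp_agents",
                    compartment_id=self.compartment_id,
                    name="token_usage",
                    dimensions={"agent_id": agent_id, "model": metrics["model"]},
                    datapoints=[Datapoint(timestamp=now, value=metrics["tokens_used"])],
                ),
            ]

            self.monitoring.post_metric_data(
                post_metric_data_details=PostMetricDataDetails(metric_data=metric_data)
            )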
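
`track_agent_metrics` expects a dictionary with `response_time`, `model`, and `tokens_used`, but the source does not show where those values come from. A minimal sketch of one way to collect them is a context manager that times the agent call. `measure` is a hypothetical helper, and the token count is a stand-in for whatever the model response reports.

```python
import time
from contextlib import contextmanager


@contextmanager
def measure(model, clock=time.perf_counter):
    """Yield a metrics dict and fill in response_time (milliseconds) on exit."""
    metrics = {"model": model, "tokens_used": 0, "response_time": None}
    start = clock()
    try:
        yield metrics
    finally:
        metrics["response_time"] = (clock() - start) * 1000.0


with measure("cohere.command-r-plus") as m:
    # The agent call would go here; the token count is a placeholder value.
    m["tokens_used"] = 128
```

After the block exits, `m` has the shape `track_agent_metrics` reads, so it could be passed along as `track_agent_metrics(agent_id, m)`.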

Benefits for Enterprises

- 60% cost reduction: automatic resource optimization
- 3x faster time-to-market: pre-built pipelines
- Automatic compliance: GDPR, HIPAA, and SOC 2 built in
- Unlimited scalability: from 10 to 10M requests/day without changes

Full documentation and examples are available on the Oracle AI & Data Science Blog, setting a new standard for enterprise-grade AI.